Virtualized Test Lab Basics with Hyper-V

Date Posted: Aug 31 2009

Author: Joe

Posting Type: Article

Category: IT Topics

Page: 2 of 4

The Hardware

The limiting factor in any virtualization project is memory. Memory is to VMs what water is to fish... they can't get enough of it. Thankfully, chipsets and motherboards are starting to support large amounts of RAM on consumer-grade boards using cheap DDR2/DDR3. CPUs play a secondary role in these systems; memory is far more important.

Now, you can go and buy a server-class board, throw 96GB of RAM on it, and that will do you nicely, but spending $10,000 on a motherboard and RAM is a bit outside the scope of this article. We are talking about doing this on the cheap (relatively).

Keep in mind that you are not building a benchmark box... you aren't playing Crysis on this thing, and you aren't concerned about massive frame rates or insane bus speeds. This is about capacity: running as many guests as possible, not building a twitch machine. Certain technologies will definitely help with virtualizing guests, but they are rarely the same things you would buy for your home machine. I will highlight these technologies as we go through them.

Since memory type and capacity are dictated by the motherboard and chipset, let's start there.

Motherboards:

I am not going to hide my bias... I currently love Gigabyte motherboards. Every machine in my house runs one, and they run cool and stable even when nicely OC'd. With that disclosure out of the way...

Some factors to take into account when choosing a board for a Virtualization server:

- Chipset - This determines the features available, performance, memory capacity, memory type, and in some cases overclockability.

- Memory Capacity - Part of the above, but still important on its own. Note the speeds and capacity supported, and read reviews on whether the board is stable when loaded to the max. A few years ago it was a common problem that boards would not work well with all the memory slots full.

- CPU Compatibility - What socket, CPU brand, and CPU type are supported.

- Onboard NICs - How many? You want more than one.

- Onboard SATA connections - The more the better, and all should be SATA2 and support some form of RAID.

- PCIe x16, x8 and x4 slots - For adding RAID controllers, high-speed NICs, etc.

- Onboard Video - This isn't offered too often on top-of-the-line motherboards.

Here is a picture of my VM Lab server:

The motherboard I am using is a Gigabyte GA-EP45-UD3P. The reasons I went with it are:

- Intel P45 chipset - Cool running, supports 16GB ram, and is fast.

- Dual onboard NICs

- 8 Onboard SATA connections, all supporting some form of RAID.

- 2 PCIe x16 connectors, so I can fit a RAID controller and a NIC if I need to (and as you can see, there is a RAID card in there).

- TPM support - Trusted Platform Module - This is a chip that Windows BitLocker can use to essentially encrypt your entire server. I played with it a bit, but it hurt performance too much, so I disabled it and removed the encryption from the system.

- The Gigabyte Ultra Durable 3 construction - I feel these boards are way more robust than some others I have used. The Advanced Dynamic Energy Saver is a great feature to keep power costs down.

The motherboard is great, works flawlessly, and runs 24/7 without issue. The CPU (a Q9300) is OC'd to a 1600 FSB on nearly stock voltage and never barks. Notice the stock HSF in the pic above.

Chipsets and Memory type - Each chipset has a memory technology it is built around. The P45 supports DDR3 or DDR2, but this board only supports DDR2. When doing your shopping, keep in mind that DDR2 is currently cheaper and easier to come by in larger capacities.

You may notice this is an LGA775 board, and not one of the new Core i7 (Nehalem) based systems. The reason is that the Nehalem hardware at the time ran too hot and was too power hungry. It does offer some serious performance increases for virtualization, but DDR3 memory is nearly impossible to come by in large enough sizes at reasonable prices, so it is just not cost effective yet (mid 2009). When DDR3 prices drop, Nehalem-based systems will be a great way to go, and they support more memory with DDR3 (24GB+) than the latest iterations of DDR2 boards (16GB).

Memory:

Now that we have chosen our motherboard, here comes the hard part: buying RAM that not only has the specs you want, but is also available, cheap, and supported by the motherboard.

I am running an OCZ 16GB DDR2-800 (4x4GB) Gold series kit. I got it off Amazon for under $400 back in spring of '09. 16GB kits still seem to be very hard to find and are out of stock everywhere. Newegg hasn't had a kit at a reasonable price for months. I have also had luck with Geil memory kits in the past.

Quantity: Buy on size. 16GB is the least you should put into any serious lab host. 16GB is enough to run 6 - 10 big beefy guests easily, and many more smaller ones. 16GB is the max for P45 chipset boards. If you are going with the DDR3 i7 hardware, 24GB is your max. 4GB DDR3 modules are almost impossible to find at the time of this writing. If you can buy the RAM as a kit, do it. You know then that the 4 modules in the kit were tested to work together and operate at similar voltages.

Quality: Buy good RAM... not great RAM, not super fast RAM, just good RAM from a good company with a solid warranty. I bought the OCZ 16GB Gold kit.

Speed: DDR2-800 is a solid speed. You don't need more (and in fact you won't find much faster in a 16GB kit).
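To sanity-check how many guests a given amount of RAM will hold, here is a minimal Python sketch. It assumes each guest gets a fixed, statically assigned amount of RAM and that some memory is held back for the host itself; the 2GB host reserve and the guest sizes are hypothetical numbers, not measurements from the lab described here.

```python
def guests_that_fit(host_ram_gb, host_reserve_gb, guest_ram_gb):
    """Return how many equally sized guests fit in the RAM left over for VMs."""
    if guest_ram_gb <= 0:
        raise ValueError("guest_ram_gb must be positive")
    available = host_ram_gb - host_reserve_gb
    if available <= 0:
        return 0
    return int(available // guest_ram_gb)


# Hypothetical example: 16GB host, 2GB held back for the host OS.
for guest_gb in (2, 1.5, 1, 0.5):
    count = guests_that_fit(16, 2, guest_gb)
    print(f"{guest_gb}GB guests: {count}")
```

With these assumed numbers, guests in the 1.5GB to 2GB range land inside the 6 - 10 figure quoted above, and smaller guests fit in much larger numbers.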

CPU:

This is an area that I think many would fret over, but in reality it plays little role in a home test lab server. If you are deploying enterprise VM servers, then this is incredibly important, but not for us. The only things that are critically important are the DEP/NX feature and Intel VT / AMD-V functionality.

I run a retail Intel Q9300 clocked at 3GHz with the stock HSF. You don't need a big fancy expensive HSF. The CPU doesn't put out enough heat to warrant it, and since the machine is idle most of the time, the CPU is rarely at 100% load for an extended period.

The Q9300 seems to be on the way out as far as availability goes, so I would recommend a Q9550 now. It has more cache (12MB vs. 6MB) and runs at 2.83GHz stock. Cache does make a difference for VM environments, so get more of it if you can.
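For anyone wondering how the 1600 FSB overclock mentioned earlier turns into 3GHz, the arithmetic can be sketched in a few lines of Python. The sketch assumes the usual LGA775 convention that the quoted FSB figure is quad-pumped (four transfers per base clock) and that the Q9300 uses a 7.5x multiplier; neither number is stated in this article.

```python
def core_clock_mhz(fsb_rating, multiplier):
    """Core clock from a quad-pumped FSB rating and the CPU multiplier."""
    base_clock = fsb_rating / 4  # quad-pumped bus: rating is 4x the base clock
    return base_clock * multiplier


# Assumed Q9300 multiplier of 7.5 (not taken from the article).
print(core_clock_mhz(1333, 7.5))  # stock bus speed
print(core_clock_mhz(1600, 7.5))  # overclocked bus speed
```

Under those assumptions the stock 1333 FSB works out to roughly 2.5GHz and the 1600 FSB setting to 3.0GHz.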

A word about the Core i7 chips. Intel and AMD are both releasing new virtualization technologies in their newest line of chips. Intel specifically has a technology called Extended Page Tables (EPT). This technology dramatically shrinks the overhead on the host, and increases efficiency across the entire Hypervisor. There are many other technologies coming out. In an enterprise environment EPT and others play a big role in high VM densities. For a test lab though that is a minor thing. I would wait until the second gen of the Nehalem architecture is released with lower power consumption and lower heat output and the additional VM enhancements that are coming along.

You will want a quad-core CPU, but beyond that... have fun. Intel and AMD are comparable, but one BIG word of warning:

Not All Intel Core 2 Duo or Core 2 Quad CPUs Support Virtualization

How pissed off would you be if you bought a nice lil quad-core CPU from Intel just to find out that it had the Intel VT-x instructions disabled?! Well, it's happened to many folks. You can get a listing of which cores support virtualization and which do not from this link: http://ark.intel.com/VTList.aspx

In short DO NOT buy a CPU in these series:

Intel Core 2 Duo - 4xxx,5xxx,72xx,73xx,74xx,75xx,81xx

Intel Core 2 Quad - 82xx, 83xx

Almost all AMD AM2/AM3 socket CPUs have this feature, even down to the $39.00 Sempron 140! But still check to make sure before you buy.

There is NO WAY to get Hyper-V to run without this feature.
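If you already have the CPU in hand, one way to check is to boot a Linux live CD and look at the CPU flags in `/proc/cpuinfo`, where `vmx` indicates Intel VT-x, `svm` indicates AMD-V, and `nx` indicates the NX/DEP bit. The Python sketch below parses that kind of text. The sample string is a trimmed, hypothetical stand-in for a real `/proc/cpuinfo`, and the function names are made up for illustration. Note that the flags only show what the CPU supports; the feature still has to be enabled in the BIOS.

```python
def virtualization_flags(cpuinfo_text):
    """Return which of the vmx/svm/nx flags appear in /proc/cpuinfo-style text."""
    wanted = {"vmx", "svm", "nx"}
    found = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            found |= wanted & set(value.split())
    return found


def meets_article_requirements(cpuinfo_text):
    """True if NX plus either Intel VT-x (vmx) or AMD-V (svm) is reported."""
    flags = virtualization_flags(cpuinfo_text)
    return "nx" in flags and bool(flags & {"vmx", "svm"})


# Hypothetical, heavily trimmed sample; a real file lists many more flags.
SAMPLE = """\
processor : 0
model name : Intel(R) Core(TM)2 Quad CPU Q9300 @ 2.50GHz
flags : fpu vme de pse tsc msr pae mce cx8 apic nx lm vmx est tm2 ssse3
"""

print(sorted(virtualization_flags(SAMPLE)))
print(meets_article_requirements(SAMPLE))
```

On a real system you would pass in the contents of `/proc/cpuinfo` instead of `SAMPLE`.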

Disk:

The most common mistake I see in disk layouts for VM servers is building one large, monolithic RAID 0 or RAID 5 set to hold all of the guests. I am going to explain why that's a bad idea in practice.

On your home PC, when you run a Windows Update, or install an application or an OS for that matter, your disk gets thrashed nicely, doesn't it? Even if you have a big, powerful striped array, it still takes a beating; it just gets the job done faster.

Now imagine doing that a few times... at the same time, on the same disks. Do you think performance will be good just because you threw a bunch of disks in an array? No. Disk queue lengths will skyrocket, speeds will plummet, and you will be sad.

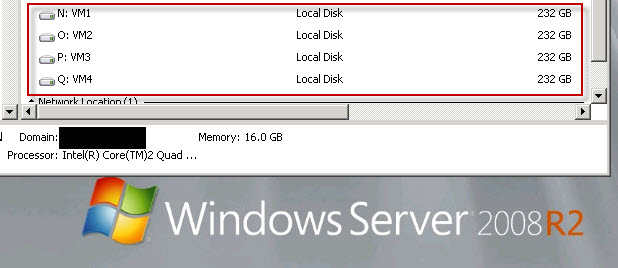

Since we are talking about a lab here, there isn't much that is "mission critical", so we really don't need to concern ourselves with RAID, or any kind of high end redundancy for the lab guests. Because of that, let's keep this simple. Below is an image of the front of my server:

The small 2.5" bays are for the OS/System (6-disk RAID 10). The disks to focus on are the big 3.5" hot swap drives at the top. 4 of the 5 bays hold my VM disks. The fifth holds a daily backup disk, and when I do monthly backups, the drives for that go in the same slot. The 4 VM disks are just JBOD: 250GB 7200RPM Seagate disks. Nothing special. They are connected to the onboard SATA controller.

The layout in the Host is this:

The logic behind this:

In my experience most lab setups are 2 - 6 VMs, with most being 4 or so. So when I provision my guests, I spread them out across the 4 disks. That way I have full I/O isolation between these VMs, which are normally pretty I/O intense when they are first being configured. This removes the chance that one VM can slow down all the rest. Even when I do double up on a drive, the performance isn't that bad.

Even if I have two 6-machine labs running, I am normally only doing high I/O work on one set of machines at a time. The machines share disks with other possibly live machines, but because of how they are spread out, they rarely contend with one another for I/O time.

Now in my server you see the big bad ass Areca 1230 12x SATA2 RAID card (1GB cache and BBU), but nothing for the VM system runs on that card. That RAID controller runs my OS/System RAID 10 array and my media disks, and that's it. There is no need to invest in a card like that just for a VM server.
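The "spread them out across the disks" idea amounts to a simple round-robin placement. Here is a minimal Python sketch of it; the drive letters and guest names are hypothetical, and in practice the placement would be done by hand when choosing where each guest's virtual disk files live.

```python
from itertools import cycle


def spread_guests(guests, disks):
    """Assign guests to disks round-robin so each disk hosts as few guests as possible."""
    if not disks:
        raise ValueError("need at least one disk")
    placement = {disk: [] for disk in disks}
    for guest, disk in zip(guests, cycle(disks)):
        placement[disk].append(guest)
    return placement


# Hypothetical 6-guest lab on four JBOD disks.
lab = ["DC01", "SQL01", "WEB01", "APP01", "CLIENT01", "CLIENT02"]
for disk, names in spread_guests(lab, ["E:", "F:", "G:", "H:"]).items():
    print(disk, names)
```

With up to four guests, every guest gets a disk to itself; beyond that, disks start doubling up, which is the case described above where performance is still acceptable.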

I may add on an external eSATA cage at some point for more VM disks, but that's down the road. I have run 17 VM Guests at once in a big lab I made a while ago, and the 4 disks were enough to handle even that.

Network:

This is optional, as you can have all your VMs running isolated in a host-only configuration if you want. But most people will want their guests to be able to access the internet and other systems.

The rule of thumb is to keep your host NIC separate from the guest NIC: dedicate one of the NICs just to the guest VM network. If you are using iSCSI connections, an additional NIC should be installed for that.

In general for the purposes of a lab, the NICs don't matter. In the enterprise world though there are real changes coming with virtualization specific offloading NICs like the Intel Pro 1000VT:

That NIC is specialized to offload TCP IPv4 and IPv6 traffic from VM guests directly, taking that load off the host CPU. This is part of Intel's plan to have the disk, network, chipset, and CPUs all tuned to accelerate and offload as much of the virtualization load from the host as possible. This is just eye candy for the most part, as it isn't required in a test lab. And it costs over $800.00. But hey, if yer rich, or need the best of the best - there you go!

Enclosure:

The case is important once you know how many disks you need and the scale of your system. If you look at the server pic above, I am running an Antec P182 case. I got it on sale at Newegg. The thing to remember is that the case could end up VERY heavy if you load it with disks.

The P182 case gave me the ability to route most of the wires cleanly behind the motherboard tray. Now, I know there are still tons of wires visible, but for the volume of wires (for 17 hard drives) it's not too bad.

From my case you can see the multiple external drive bays loaded with disks and the lack of a DVD-ROM. I ran out of space to do what I wanted. Most of that is out of scope for this article, since those disks are not used for the VM environment. But you can see you need to be careful about which case you pick to ensure everything fits.

The 5x 3.5" hot swap bay is an Icy Dock MB455SPF-B. I never swap the VM drives out, but I guess I could if I wanted. They are there just because I ran out of internal locations.

Any case you pick up should have large, slow-spinning fans. Most of us will keep these servers within earshot, so keeping them quiet and stealth-like is key. Using a good low-power CPU will definitely help control the heat output of the machine.

Power Supply:

Once again, you are not building the end-all be-all gaming rig with 3 monster graphics cards. So save your money on the massive 1kW PSU, and get something more reasonable.

In most cases these servers will be running 24/7, so efficiency is what you should be looking for. Something with this logo:

That logo (the 80 PLUS certification mark) means the PSU is at least 80% efficient at converting and transferring power from your power cord to the innards of your rig. The PSU I am running is an older but still good Antec NeoHE 460 watt PSU, which is incredibly low compared to the 600 watt PSUs that are the min spec these days. But that PSU still runs this server, with its 17 drives, without fail.

They don't make that PSU anymore, but there are plenty of better ones out there now. I would stay in the 600 watt range for a good efficient one, and modular cables keep the mess in the case under control.
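To see why efficiency matters on a box that never shuts off, here is a rough Python sketch of the arithmetic: power drawn at the wall is the load inside the case divided by the PSU efficiency. The 200 watt load and the 70% comparison figure are hypothetical values chosen for illustration, not measurements of this server.

```python
def wall_draw_watts(dc_load_watts, efficiency):
    """Power pulled from the wall for a given internal load and PSU efficiency (0-1)."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return dc_load_watts / efficiency


def yearly_kwh(wall_watts, hours_per_day=24):
    """Energy used per year, in kWh, at a constant wall draw."""
    return wall_watts * hours_per_day * 365 / 1000


# Hypothetical 200W load, comparing an 80% efficient PSU with a 70% one.
for eff in (0.80, 0.70):
    watts = wall_draw_watts(200, eff)
    print(f"{eff:.0%} efficient: {watts:.0f}W at the wall, {yearly_kwh(watts):.0f} kWh/year")
```

Multiply the yearly difference by your local electricity rate to see what the less efficient supply would cost you.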

Video:

Last and certainly least... Video. Here's the deal: either you get a motherboard with onboard video, or you buy a PCI video card. Yep, you heard me right, a plain PCI VGA card... The reasons for this are:

- You don't want a powerful vid card eating up juice doing nothing and generating lots of heat.

- You don't want to eat up a valuable PCIe slot if you don't need to.

- Doesn't everyone have one of these in some box in the basement?!

In my server pic you can see I am running some small POS PCI card. For the few minutes a year it's used (I use Remote Desktop to control the server), it's not worth a serious investment.

That about wraps up all parts of the server from a hardware perspective. Next let's talk a bit about the software.
